Recently, LookDeep shared the first and most transparent, peer-reviewed paper to date on Vision AI for Smart Hospitals (Frontiers of Imaging – AI). This publication offers a detailed look at how we train models, navigate labeling challenges, select architectures, structure processing flows, and achieve state-of-the-art computer vision performance (and limitations) in real-world hospital settings.

We advocate for transparency for two key reasons—balancing principle with pragmatism.

- First, we believe openness is essential for the long-term adoption and success of AI in “Smart” or “Ambient” hospitals. Without it, trust from frontline caregivers—nurses and doctors—is nearly impossible to achieve. The industry has been plagued by exaggerated demo videos and high-profile failures like IBM Watson, which have undermined confidence in AI’s potential.

- Second, we can share this level of detail because we’ve seen strong results with real hospitals and have advanced well beyond the architecture, capabilities, and results presented in the paper. That progress is what lets us be transparent without hesitation; we would likely not take this position if we weren’t confident in how much further we’ve pushed since those initial results.

This update highlights aspects of our results and the remarkable new capabilities we’ve developed since then.

New Capabilities Lead the Industry

In the paper itself, we primarily covered how we analyze sequences of images to extract objects and their interactions. Since then we have added audio and video processing models, edge- and cloud-based model options for every use case, integration with Gemini, OpenAI, and Microsoft foundation models, integration with AI scribe and other audio processing capabilities, and much more. We have also exposed private APIs that allow partners to embed our video and edge/cloud AI capabilities more directly into their own applications.

LookDeep believes we should provide excellent video services for hospitals, a last-mile platform that integrates with everything at the hospital and in the room, the ability to run edge AI and cloud AI models, and best-in-class integration with the world’s best AI capabilities from any partner a hospital would want to work with. All of the data generated by our AI capabilities is fully accessible to our hospital partners, so they can use the synthesized insights and/or the granular AI data to improve their models and data analysis for sepsis, clinical deterioration, care activity, and much more.

Improving on the Most Powerful Computer Vision for Patient Rooms Available Today

In our paper, we showed examples of the challenges of camera placement in real-world settings. Our results were not based on near-perfect camera placement (image 5 below) but on AI in the real world, where awkward room configurations and poorly positioned camera perspectives are normal. This is a large part of why our AI models work well from day one in new environments: we built our platform to accommodate the imperfections of real-world use.

Representative Sample of Video Perspectives in a Hospital

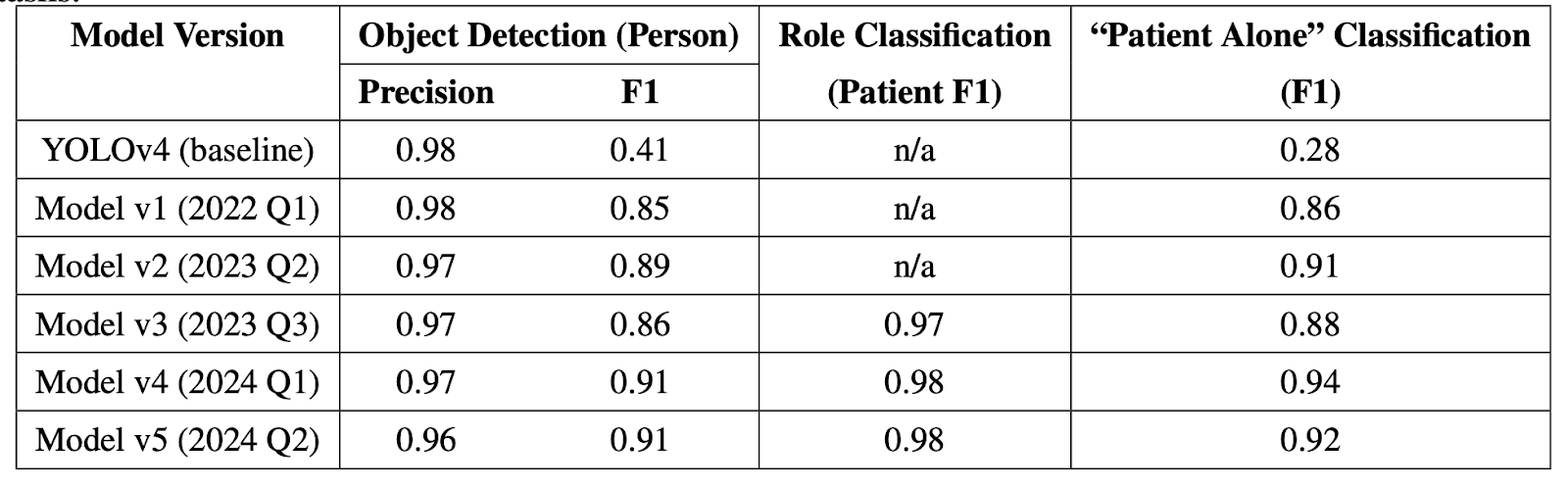

The table below (from the manuscript) illustrates how our models improved successively over time relative to the object detector that was state of the art when we began (YOLOv4).

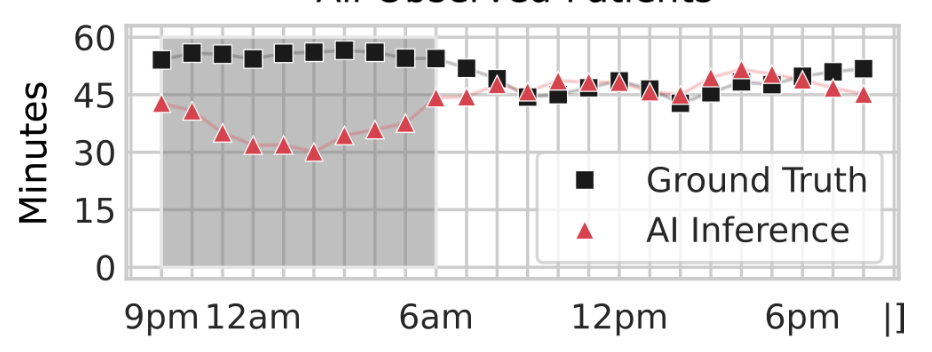

Even with the performance profile above, there were times when the model did not perform as well (see below for performance on time the patient is alone). Specifically, sensitivity to light and IR noise hurt performance at night.

Finally, object detection models have gotten much better since the manuscript was written (e.g. YOLOv8 through YOLO11 are substantially improved in both speed and accuracy).

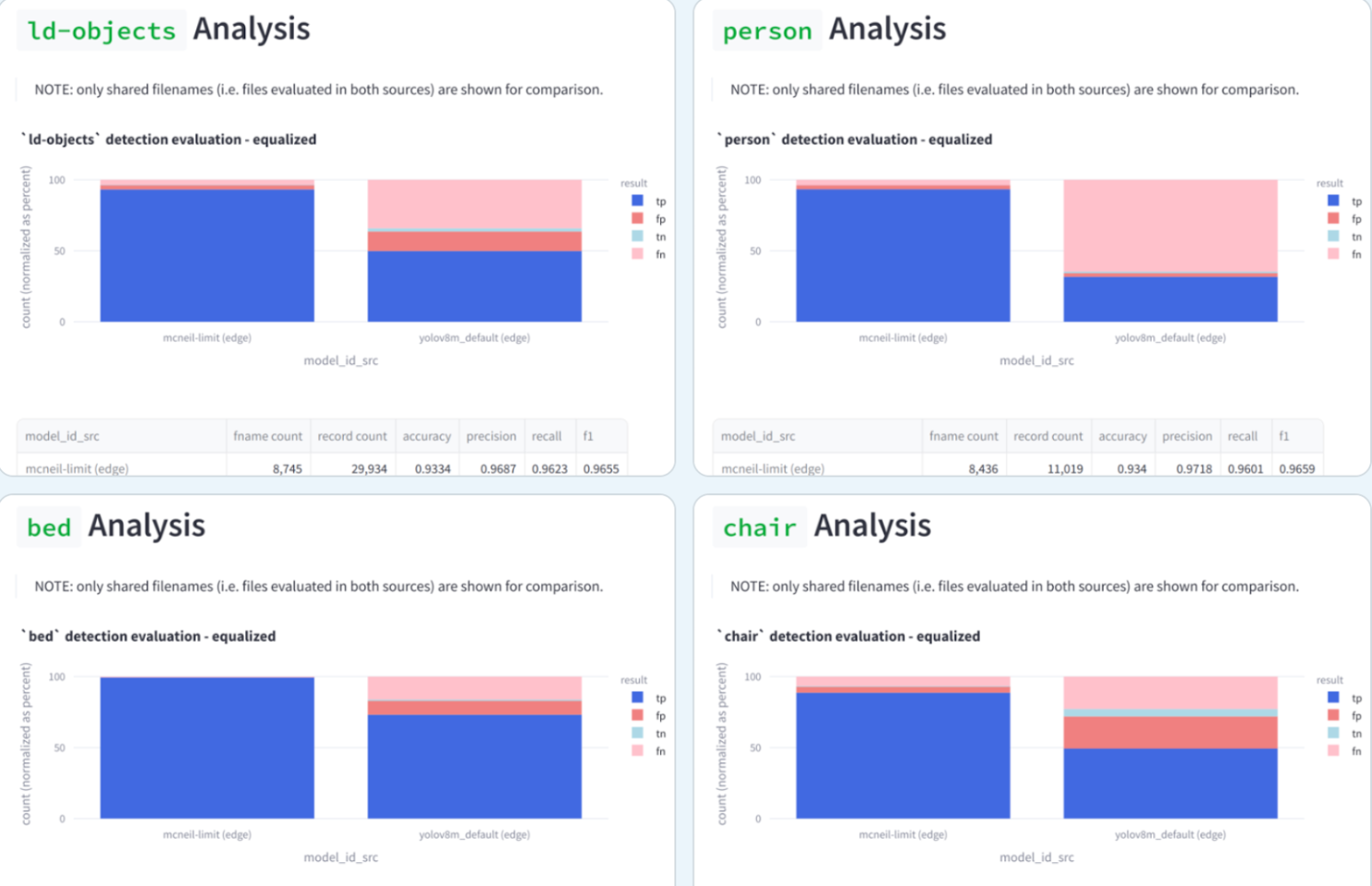

Today, we are thrilled to announce we have far surpassed these benchmarks. Below you can see how our recent models compare to the most recent YOLO models on object detection in hospital rooms.

All of our major object types now have F1 scores above 0.95 (beds and people are even higher), and the models hold these levels without a major drop-off at night (i.e. with less than ideal light / IR conditions). In addition, the variance in performance across hospitals has diminished, and the model runs substantially faster and more efficiently (allowing more edge AI capabilities to run).
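For readers less familiar with the metric, F1 is the harmonic mean of precision and recall, so a high score requires both few false alarms and few missed detections. The short sketch below shows how it is computed from detection counts. The counts are hypothetical numbers chosen for illustration only; they are not LookDeep results, and `f1_score` is an illustrative helper rather than part of any LookDeep API.

```python
def f1_score(true_positives: int, false_positives: int, false_negatives: int) -> float:
    """Return F1, the harmonic mean of precision and recall."""
    predicted = true_positives + false_positives  # everything the model detected
    actual = true_positives + false_negatives     # everything that was really there
    if predicted == 0 or actual == 0:
        return 0.0
    precision = true_positives / predicted
    recall = true_positives / actual
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Hypothetical counts for a single object class (illustration only).
score = f1_score(true_positives=960, false_positives=30, false_negatives=40)
print(f"F1 = {score:.3f}")
```

Because F1 penalizes whichever of precision or recall is lower, a model cannot reach 0.95 by being strong on only one of the two.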

LookDeep Object Detection Comparison in Hospital Rooms

A New Era of Foundation Models and Capabilities

We will always have a need for AI on the edge. Cost, performance, security, latency, and poor connectivity are among the reasons that make it a necessity. However, we all see the incredible rate of innovation across the industry. We see one of the most important functions of our platform as being a secure and trusted gateway that connects that innovation to the hospital room.

In hospitals today we are testing and previewing that future. Here are just a few of those capabilities.

- Live two-way real-time translation using OpenAI – Translate to and from a patient’s language in real time, with generated audio, for more than 50 languages. Eventually, with regulatory updates and technical validation, hospitals will be able to save 90% on their existing translation costs and make translation available immediately to any patient or staff member at any time.

- Using Gemini to identify common nursing actions from video – Staff can see if a patient was helped out of bed, turned, went through PT, and much more.

- Passing audio of patient / staff interaction to leading AI scribe solutions – Staff can ambiently activate their preferred AI scribe company (“get _____ scribe”) and move into this workflow without any additional device in the room.

- “Time Machine” to understand critical moments – Virtual team members can take specified actions (e.g. hit the alarm button if the patient cannot be reasoned with), and the system can obtain and analyze data from that critical moment and what led up to it (e.g. give me everything from a minute before the event until a staff member entered the room).
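The “Time Machine” window in the last item can be pictured as a simple query over timestamped room events. The sketch below is a minimal illustration under stated assumptions, not LookDeep’s implementation: the `RoomEvent` type, the event kinds such as `"alarm"` and `"staff_entered"`, and the `critical_window` helper are all hypothetical names invented for this example.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass(frozen=True)
class RoomEvent:
    """Hypothetical timestamped event produced by in-room AI models."""
    time: datetime
    kind: str  # e.g. "patient_sitting_up", "alarm", "staff_entered"


def critical_window(
    events: List[RoomEvent],
    alarm_time: datetime,
    lead: timedelta = timedelta(minutes=1),
) -> List[RoomEvent]:
    """Return events from `lead` before the alarm until staff first enter after it."""
    start = alarm_time - lead
    # The first staff entry at or after the alarm closes the window.
    # If no staff entry was recorded, the window stays open-ended.
    end: Optional[datetime] = min(
        (e.time for e in events if e.kind == "staff_entered" and e.time >= alarm_time),
        default=None,
    )
    selected = [
        e for e in events
        if e.time >= start and (end is None or e.time <= end)
    ]
    return sorted(selected, key=lambda e: e.time)


# Hypothetical event log for one room (illustration only).
t0 = datetime(2025, 1, 1, 3, 0, 0)
events = [
    RoomEvent(t0 - timedelta(minutes=5), "patient_in_bed"),
    RoomEvent(t0 - timedelta(seconds=40), "patient_sitting_up"),
    RoomEvent(t0, "alarm"),
    RoomEvent(t0 + timedelta(seconds=90), "staff_entered"),
    RoomEvent(t0 + timedelta(minutes=10), "patient_in_bed"),
]
window = critical_window(events, alarm_time=t0)
print([e.kind for e in window])
```

In this example the window keeps the event 40 seconds before the alarm, the alarm itself, and the staff entry, and drops everything outside that span.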

We have several exciting announcements we will preview and launch in early 2025. Our platform allows us to quickly incorporate and build new capabilities, but testing and iteration to understand potential edge cases and to find the optimal collaboration with staff in the real world are necessary steps before these features can even enter preview.

Because it can see and hear, AI is the first technology of our lifetimes that can truly transform patient care in hospitals. We want to safely and transparently make that a reality for patients and those who care for them in the hospital. We look forward to continuing to share more in peer-reviewed presentations and papers, though those will always be a look back. Going forward we will also share more hands-on product demonstrations and evidence-centric public AI announcements.

Data and Demos

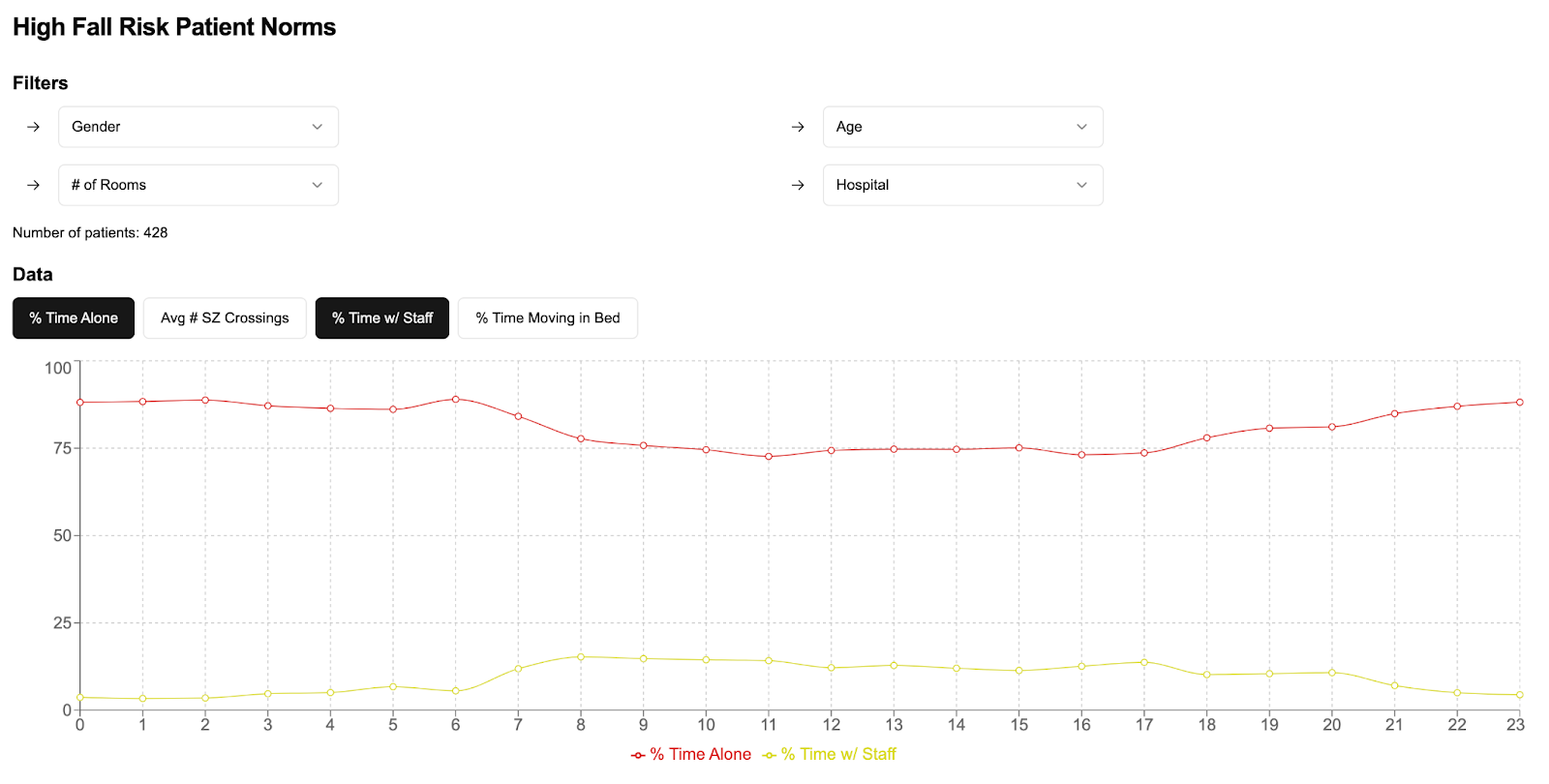

We have also shared more on the norms of patients in the hospital. See here and below to examine differences in how patients spend time across the day, by gender, age, or hospital location. For a more technical view of this data and the analysis, visit our GitHub page.

https://lookdeep.vercel.app/